Memory management techniques improve system performance

Before adopting memory management strategies, admins should understand the available approaches so they can implement the techniques best suited to their specific circumstances.

Server virtualization relies on effective memory management to ensure optimal workload performance and resource utilization. However, managing memory in a virtual environment can be a complex process. For this reason, virtualization platforms provide various memory management techniques that let IT administrators control how the host allocates memory to individual VMs.

The differences between these techniques can be confusing, especially because they can overlap. To use them effectively, admins must consider their workload requirements and implement only the techniques suited to their particular circumstances. Memory management requires careful planning and long-range thinking to take full advantage of memory resources.

Memory ballooning

Memory ballooning enables a physical host to temporarily reclaim unused memory from one guest VM and assign it to another. Each VM runs a balloon driver that identifies unused memory and communicates this information to the hypervisor. If the hypervisor must allocate that memory to other VMs, the balloon driver "inflates" by locking the unused memory so the guest OS can't use it for its own processes, and the hypervisor reassigns the physical memory behind it. When the memory is no longer needed elsewhere, the hypervisor notifies the balloon driver, which "deflates" by unlocking the reserved memory so it's available to the VM again.
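The inflate-and-reclaim cycle can be sketched as a toy Python model. It tracks memory in megabytes rather than guest pages. The `GuestVM` and `Host` classes, their methods and the VM-by-VM reclaim order are hypothetical illustrations. They do not describe how any particular hypervisor or balloon driver works.

```python
from dataclasses import dataclass, field


@dataclass
class GuestVM:
    """A guest VM with a balloon driver (hypothetical, MB-level model)."""
    name: str
    configured_mb: int   # memory the guest OS believes it has
    in_use_mb: int       # memory the guest's workloads are actually using
    balloon_mb: int = 0  # memory currently locked by the balloon driver

    def free_mb(self) -> int:
        """Unused memory the guest could give up without touching workloads."""
        return self.configured_mb - self.in_use_mb - self.balloon_mb

    def inflate(self, request_mb: int) -> int:
        """Lock up to request_mb of unused memory; return the amount locked."""
        granted = max(0, min(request_mb, self.free_mb()))
        self.balloon_mb += granted
        return granted

    def deflate(self, release_mb: int) -> int:
        """Unlock up to release_mb so the guest can use it again."""
        released = max(0, min(release_mb, self.balloon_mb))
        self.balloon_mb -= released
        return released


@dataclass
class Host:
    free_physical_mb: int
    vms: list = field(default_factory=list)

    def reclaim(self, needed_mb: int) -> int:
        """Inflate balloons VM by VM until needed_mb has been reclaimed."""
        reclaimed = 0
        for vm in self.vms:
            if reclaimed >= needed_mb:
                break
            reclaimed += vm.inflate(needed_mb - reclaimed)
        # Physical memory behind the locked guest memory is now free to reassign.
        self.free_physical_mb += reclaimed
        return reclaimed

    def release(self, vm: GuestVM, mb: int) -> int:
        """Give memory back to a VM by deflating its balloon."""
        released = vm.deflate(min(mb, self.free_physical_mb))
        self.free_physical_mb -= released
        return released


idle = GuestVM("idle", configured_mb=4096, in_use_mb=1024)
busy = GuestVM("busy", configured_mb=4096, in_use_mb=3900)
host = Host(free_physical_mb=0, vms=[idle, busy])

reclaimed = host.reclaim(2048)  # the idle VM can cover the whole request
print(reclaimed, idle.balloon_mb, busy.balloon_mb)  # 2048 2048 0
host.release(idle, 1024)        # demand elsewhere drops; give half back
print(idle.balloon_mb, host.free_physical_mb)       # 1024 1024
```

`inflate` never locks more than a guest's unused memory. If the idle VMs cannot cover a request, `reclaim` returns less than was asked for. This corresponds to the case where ballooning alone can't meet demand.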

Memory ballooning makes it possible to allocate more memory to the VMs than the host's total physical memory. It also shifts the decision about which memory to give up from the host to the guest OS, which can better assess what memory it actually needs. In addition, admins can use memory ballooning without disrupting running workloads. However, memory ballooning can create performance issues if the original VM no longer has the memory it requires. Ballooning might also reclaim memory too slowly to meet VM demands, especially if multiple VMs request memory simultaneously.

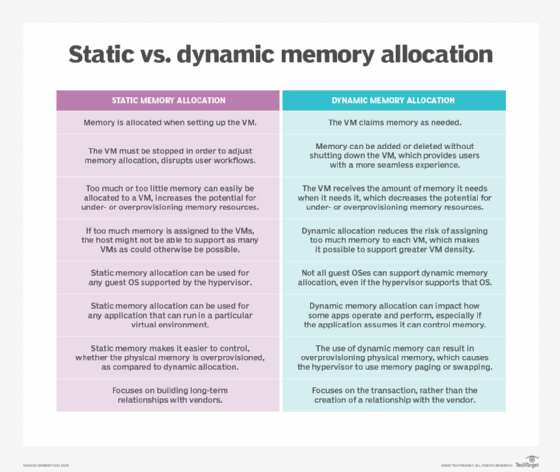

Dynamic memory allocation

Dynamic memory allocation automatically assigns memory to running VMs. It works similarly to memory ballooning, except that admins don't assign a fixed amount of memory to each VM. Instead, VMs claim the memory they require from a pool of physical resources, operating within specified threshold boundaries. The hypervisor continuously monitors and rebalances memory allocations as necessary to meet fluctuating workload demands.
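One way to picture threshold-bound rebalancing is a function that gives every VM its minimum and then splits the rest of the pool according to unmet demand. This is a sketch under stated assumptions. The `min_mb`, `max_mb` and `demand_mb` fields and the proportional split are hypothetical, and they do not reproduce any specific hypervisor's policy.

```python
from dataclasses import dataclass


@dataclass
class DynamicVM:
    name: str
    min_mb: int           # lower threshold: never assign less than this
    max_mb: int           # upper threshold: never assign more than this
    demand_mb: int        # what the workload currently wants
    assigned_mb: int = 0  # what the hypervisor has handed out


def rebalance(pool_mb: int, vms: list) -> None:
    """Redistribute a shared physical pool across running VMs.

    Every VM first gets its minimum. The rest of the pool covers each VM's
    unmet demand (capped at its maximum). If the pool is too small, each
    VM's extra share is scaled down proportionally.
    """
    total_min = sum(vm.min_mb for vm in vms)
    if total_min > pool_mb:
        raise ValueError("pool cannot satisfy the minimum thresholds")
    remaining = pool_mb - total_min
    # Extra memory each VM wants above its minimum, clamped to its maximum.
    extras = [max(0, min(vm.demand_mb, vm.max_mb) - vm.min_mb) for vm in vms]
    total_extra = sum(extras)
    for vm, extra in zip(vms, extras):
        if total_extra <= remaining:
            share = extra
        else:
            share = remaining * extra // total_extra  # integer MB, rounds down
        vm.assigned_mb = vm.min_mb + share


web = DynamicVM("web", min_mb=512, max_mb=4096, demand_mb=3000)
db = DynamicVM("db", min_mb=1024, max_mb=6144, demand_mb=6000)
rebalance(8192, [web, db])   # combined demand exceeds the pool: shares are scaled
print(web.assigned_mb, db.assigned_mb)  # 2730 5461
web.demand_mb = 600          # web traffic drops
rebalance(8192, [web, db])   # now every VM's demand fits
print(web.assigned_mb, db.assigned_mb)  # 600 6000
```

Running `rebalance` again whenever demand changes is what lets the pool follow the workload. A static allocation would leave both VMs at fixed sizes.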

Dynamic memory allocation is more flexible than static allocation because the system can change a VM's memory while the VM is running, which static allocation does not allow. Dynamic allocation also keeps memory sized to current workloads. This helps increase VM density and takes the guesswork out of memory assignment.

Dynamic memory allocation also lets admins overprovision memory, although doing so can leave VMs short of physical memory. Static allocation, by contrast, gives admins tighter control over how memory is distributed. In addition, not all OSes or applications support dynamic memory allocation.

Memory paging

In a virtual environment, the hypervisor manages the physical memory used by the VMs. If memory runs low, the hypervisor might use memory paging to compensate for the shortage. With memory paging, data is temporarily transferred from the host's memory to a hard disk drive or solid-state drive, which serves as an extension to the main memory. The sections of the drive used for memory are often referred to as page files.
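The following toy model shows pages moving between RAM and a page file. The `PagedMemory` class and its least-recently-used eviction policy are assumptions chosen for illustration. Real hypervisors and OSes use their own, more sophisticated replacement policies.

```python
from collections import OrderedDict


class PagedMemory:
    """RAM with room for `frames` pages; overflow goes to a page file.

    Hypothetical model: the least recently used page is written to the
    page file whenever RAM is full and another page needs a frame.
    """

    def __init__(self, frames: int):
        self.frames = frames
        self.ram = OrderedDict()  # page id -> data, oldest access first
        self.page_file = {}       # page id -> data held on disk
        self.page_faults = 0

    def write(self, page_id, data):
        if page_id in self.ram:
            self.ram.move_to_end(page_id)
        else:
            self.page_file.pop(page_id, None)  # the RAM copy will be newer
            self._make_room()
        self.ram[page_id] = data

    def read(self, page_id):
        if page_id not in self.ram:
            # Page fault: the page lives on disk and must be read back in.
            self.page_faults += 1
            data = self.page_file.pop(page_id)
            self._make_room()
            self.ram[page_id] = data
        self.ram.move_to_end(page_id)
        return self.ram[page_id]

    def _make_room(self):
        # Evict least recently used pages until one frame is free.
        while len(self.ram) >= self.frames:
            victim, data = self.ram.popitem(last=False)
            self.page_file[victim] = data


mem = PagedMemory(frames=2)
mem.write("a", 1)
mem.write("b", 2)
mem.write("c", 3)                          # RAM is full, so "a" goes to disk
print(list(mem.ram), list(mem.page_file))  # ['b', 'c'] ['a']
print(mem.read("a"), mem.page_faults)      # 1 1  ("b" is paged out for room)
```

Every `page_faults` increment stands for a disk read. This is why heavy paging slows workloads down even though no VM runs out of memory.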

Memory paging helps prevent VMs from running out of memory and crashing as a result. It also reduces the need to add expensive memory modules when memory runs low. In addition, memory paging lets a host run more VMs than would otherwise fit in physical memory. It can also help work around the limits of a host that supports only a specific amount of memory. However, disk access is far slower than RAM access, so heavy paging can significantly degrade application performance. Admins should rely on it only when more advanced memory management techniques are not available.

Memory overcommitment

Memory overcommitment is both a management technique and an operating condition. As a technique, overcommitment refers to the practice of allocating more total memory to a host's VMs than the amount of available physical memory. This strategy is possible because VMs don't typically use all their allocated memory at the same time. As an operating condition, overcommitment refers to the state that's reached when the actively consumed memory exceeds the available physical memory. In this case, memory is considered overcommitted.
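The difference between the technique and the operating condition comes down to two comparisons, and a short function can make both explicit. The host size and per-VM figures in the example are hypothetical. The model also ignores the memory the hypervisor itself consumes.

```python
def overcommit_report(physical_gb: float, allocated_gb: list, active_gb: list) -> dict:
    """Compare VM memory against host physical memory.

    allocated_gb: memory configured for each VM.
    active_gb:    memory each VM is actively consuming right now.
    Hypervisor overhead is ignored to keep the arithmetic simple.
    """
    allocated = sum(allocated_gb)
    active = sum(active_gb)
    return {
        # Technique: more memory promised to VMs than the host physically has.
        "allocation_ratio": allocated / physical_gb,
        "overcommitment_used": allocated > physical_gb,
        # Operating condition: active demand actually exceeds physical memory.
        "overcommitted_state": active > physical_gb,
    }


# Hypothetical host: 64 GB of RAM running six VMs configured with 16 GB each.
normal = overcommit_report(64, [16] * 6, [8] * 6)  # each VM actively uses 8 GB
spike = overcommit_report(64, [16] * 6, [14] * 6)  # all VMs spike at once
print(normal)  # ratio 1.5: technique in use, but not in the overcommitted state
print(spike)   # 84 GB active > 64 GB physical: overcommitted state
```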

To support this management technique yet avoid the overcommitted state, virtualization platforms typically use memory ballooning or other advanced technologies to juggle available memory across VMs. As a technique, overcommitment can improve memory utilization and support more VMs per host, which lowers overall costs. However, if the VMs require most of their memory simultaneously, the host can reach the overcommitted state. The hypervisor then starts swapping memory out to the storage drive, which degrades workload performance across all VMs.

Memory mirroring

Memory mirroring divides a host's physical memory into two separate channels and copies the contents of one channel to the other. The result is a redundant copy of memory that provides fault tolerance similar to RAID 1 storage. If the active channel fails, the memory controller switches over to the standby channel. This avoids workload disruption and gives admins time to address the issue. Once the failed channel is repaired, the system resynchronizes the two channels.
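The write-both, read-one, failover and resync sequence can be sketched in software, even though real servers implement mirroring in the memory controller. The `MirroredMemory` class, its channel names and its methods are hypothetical.

```python
class MirroredMemory:
    """Two channels holding identical copies of memory (RAID 1-style)."""

    def __init__(self, installed_gb: int):
        self.installed_gb = installed_gb
        self.channels = {"A": {}, "B": {}}  # address -> value, per channel
        self.healthy = {"A": True, "B": True}
        self.active = "A"

    @property
    def usable_gb(self) -> float:
        # Every byte is stored twice, so only half the installed memory is usable.
        return self.installed_gb / 2

    def write(self, addr, value):
        # Writes go to every healthy channel so the copies stay identical.
        for name, ok in self.healthy.items():
            if ok:
                self.channels[name][addr] = value

    def read(self, addr):
        return self.channels[self.active][addr]

    def fail(self, name):
        self.healthy[name] = False
        self.channels[name].clear()  # contents of the failed channel are lost
        if name == self.active:
            standby = "B" if name == "A" else "A"
            if not self.healthy[standby]:
                raise RuntimeError("both channels have failed")
            self.active = standby    # fail over; the data survives on standby

    def repair(self, name):
        # Resynchronize the repaired channel from the surviving copy.
        self.channels[name] = dict(self.channels[self.active])
        self.healthy[name] = True


mem = MirroredMemory(installed_gb=256)
mem.write(0x1000, "order 42")
mem.fail("A")                        # the active channel fails
print(mem.active, mem.read(0x1000))  # B order 42
mem.write(0x2000, "order 43")        # only healthy channel B is written
mem.repair("A")                      # resync A from B
print(mem.channels["A"] == mem.channels["B"], mem.usable_gb)  # True 128.0
```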

Memory mirroring provides a much higher degree of reliability than traditional memory configurations. This makes it well suited to mission-critical applications, especially those running in remote or hard-to-reach locations. However, its biggest downside is cost. Every byte is stored twice, so only half of the installed memory is usable, which effectively doubles the cost per usable gigabyte.

Memory swapping

During memory swapping, entire processes are temporarily swapped out of a system's memory to the storage disk space, similar to memory paging. However, memory paging is more efficient, in part, because it operates at the page level, whereas memory swapping moves the entire process, which consists of multiple pages. Hypervisors use memory swapping only as a last resort to address severe VM memory shortages when memory management techniques such as memory ballooning are no longer sufficient.
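One way to see why page-level granularity matters is to count the bytes each approach writes to disk to free the same amount of memory. The 4 KB page size and the process sizes below are assumptions for illustration. The model follows the process-level description of swapping given above.

```python
PAGE_SIZE = 4096  # bytes; assumed 4 KB small pages


def bytes_moved_by_paging(pages_needed: int) -> int:
    # Paging evicts only as many pages as are needed.
    return pages_needed * PAGE_SIZE


def bytes_moved_by_swapping(process_pages: list, pages_needed: int) -> int:
    # Swapping evicts whole processes, in the given order, until enough
    # pages have been freed.
    moved = 0
    freed = 0
    for pages in process_pages:
        if freed >= pages_needed:
            break
        moved += pages * PAGE_SIZE
        freed += pages
    return moved


need = 100                    # pages that must be freed
processes = [25_600, 12_800]  # hypothetical 100 MB and 50 MB processes, in pages
print(bytes_moved_by_paging(need))               # 409600 (400 KB)
print(bytes_moved_by_swapping(processes, need))  # 104857600 (100 MB)
```

In this example, swapping writes 256 times as much data to disk as paging to free the same 100 pages.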

Memory swapping offers some of the same benefits as memory paging. For example, the host's VMs aren't in danger of running out of memory should they require their maximum memory simultaneously. Swapping also means admins don't have to install extra physical memory just to absorb periodic spikes in memory usage.

However, swapping can severely impact application performance on all VMs, especially if the storage resources themselves are also working at maximum capacity. Because of this, admins should avoid memory swapping when possible.

Transparent page sharing

When multiple VMs on a host run the same OS, they often have identical memory pages. Transparent page sharing (TPS) condenses a set of identical pages into a single physical page and points each VM's copy at that page. TPS calculates a hash value for each page to find candidate matches, then compares the matching pages in full to confirm they are identical. If a VM needs to modify a shared page, the hypervisor uses copy-on-write: it gives that VM its own private copy of the page and updates the VM's pointer accordingly.
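The hash, compare and copy-on-write steps can be sketched with a small page store. The `SharedPageStore` class, the use of SHA-256 and the choice to deduplicate when a page is mapped are hypothetical simplifications. A real hypervisor would find duplicates with a background scan instead.

```python
import hashlib


class SharedPageStore:
    """Deduplicates identical guest pages; copy-on-write on modification."""

    def __init__(self):
        self.frames = {}      # frame id -> page bytes (physical copies)
        self.refcount = {}    # frame id -> number of guest pages mapped to it
        self.by_hash = {}     # content hash -> list of frame ids
        self.page_table = {}  # (vm, guest page number) -> frame id
        self._next_frame = 0

    def _store(self, data: bytes) -> int:
        digest = hashlib.sha256(data).digest()
        for frame in self.by_hash.get(digest, []):
            # A hash match is only a candidate; confirm byte for byte.
            if self.frames[frame] == data:
                self.refcount[frame] += 1
                return frame
        frame = self._next_frame
        self._next_frame += 1
        self.frames[frame] = data
        self.refcount[frame] = 1
        self.by_hash.setdefault(digest, []).append(frame)
        return frame

    def _release(self, frame: int) -> None:
        self.refcount[frame] -= 1
        if self.refcount[frame] == 0:
            data = self.frames.pop(frame)
            del self.refcount[frame]
            self.by_hash[hashlib.sha256(data).digest()].remove(frame)

    def map_page(self, vm: str, gpn: int, data: bytes) -> None:
        self.page_table[(vm, gpn)] = self._store(data)

    def write_page(self, vm: str, gpn: int, data: bytes) -> None:
        # Copy-on-write: the writer gets its own page; other VMs keep the shared one.
        old = self.page_table[(vm, gpn)]
        self.page_table[(vm, gpn)] = self._store(data)
        self._release(old)

    def read_page(self, vm: str, gpn: int) -> bytes:
        return self.frames[self.page_table[(vm, gpn)]]


store = SharedPageStore()
kernel_page = b"k" * 4096  # stand-in for a page identical across guest OSes
zero_page = bytes(4096)    # an all-zero page
for vm in ("vm1", "vm2", "vm3"):
    store.map_page(vm, 0, kernel_page)
    store.map_page(vm, 1, zero_page)
print(len(store.page_table), len(store.frames))  # 6 2

store.write_page("vm2", 0, b"x" * 4096)  # vm2 modifies its copy
print(len(store.frames), store.read_page("vm1", 0) == kernel_page)  # 3 True
```

Six guest pages fit in two physical frames only because the contents repeat. With no duplicates, the store saves nothing, which is the caveat the next paragraph raises.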

TPS targets small memory pages only, but it can still consolidate memory and free it up for other VMs without an investment in more physical memory. However, this assumes there are enough duplicate pages to consolidate memory in a meaningful way.

TPS can also be slow to deliver savings because it must first scan all the memory pages. In addition, TPS has raised security concerns in the past: sharing pages between VMs can open a side channel that lets one VM infer information about another. Admins should research this issue carefully before implementing TPS in their environments.