
Get familiar with the basics of vMotion live migration

VMware's vMotion simplifies the process of VM live migration. To use it properly, familiarize yourself with requirements for network bandwidth, host sizes and how it works.

VMotion enables you to migrate a live VM from one physical server to another. This means you can perform vSphere host maintenance without downtime, and you can balance VMs across a cluster without powering off any of them.

VMware also supports long-distance vMotion and cross-vCenter vMotion, which let you migrate workloads to another data center or the cloud. This enables you to avoid disasters when a threat is imminent, run workloads in a specific time zone and use cloud bursting if you start to run out of resources.

Before you implement vMotion, ensure you have enough memory and network bandwidth to support the migration process, your VMs are optimized for live migration and you know how the feature works across infrastructure.

VMotion live migration requirements

Almost every edition of vSphere contains vMotion; only the vSphere Essentials kit doesn't support the feature. VMotion is a process between two ESXi hosts, but it is initiated through vCenter, so it requires a vCenter license.

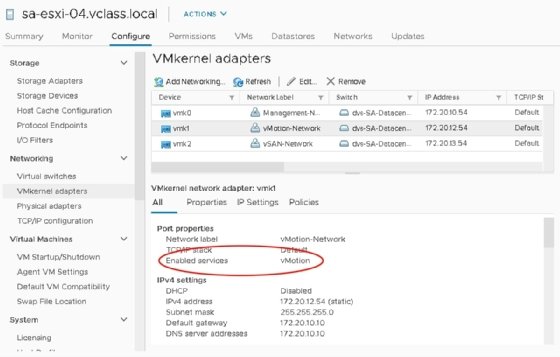

The rest of the requirements -- such as network bandwidth and RAM -- are not very demanding. The migration takes place between two ESXi hosts. Each host must have a VMkernel interface with the vMotion feature enabled, and the two hosts must be able to reach each other over the network.

The VMs you decide to migrate must remain in the same layer 2 network. There is a minimum bandwidth requirement of 250 Mbps per vMotion migration, but it is better to have more bandwidth available.

More available bandwidth means you can perform migrations faster, which matters most for VMs with large amounts of RAM.
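To get a feel for how bandwidth affects migration time, the following is a minimal sketch that estimates how long a single pass over a VM's memory takes. The function name `copy_time_seconds` is hypothetical, and the model is idealized: it assumes decimal units (1 GB equals 8 gigabits), a link used at full line rate and no protocol overhead, so real migrations will be slower.

```python
def copy_time_seconds(ram_gb: float, link_gbps: float) -> float:
    """Idealized seconds to send ram_gb gigabytes over a link_gbps link.

    Assumes decimal units (1 GB = 8 gigabits) and no protocol overhead.
    """
    if ram_gb < 0 or link_gbps <= 0:
        raise ValueError("ram_gb must be >= 0 and link_gbps must be > 0")
    return ram_gb * 8 / link_gbps


if __name__ == "__main__":
    # Compare the 250 Mbps minimum with faster links for a 16 GB VM.
    for link_gbps in (0.25, 1, 10, 25):
        seconds = copy_time_seconds(16, link_gbps)
        print(f"16 GB over {link_gbps} Gbps: about {seconds:.1f} s")
```

Under these assumptions, a 16 GB VM needs roughly 512 seconds for one pass at the 250 Mbps minimum, but only about 12.8 seconds at 10 Gbps.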

How vMotion works

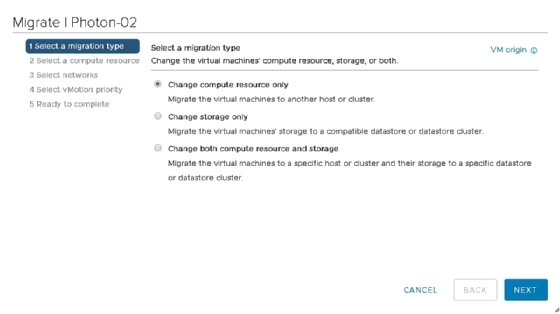

To move the VM to another physical computer, you must move the memory contents and switch instruction processing to the other computer. VCenter initiates vMotion and starts the migration after a compatibility check with the source and destination ESXi hosts.

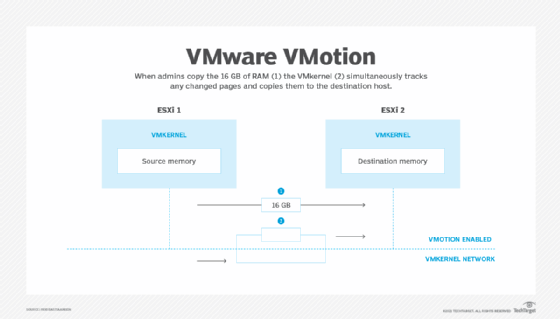

The process starts with an initial memory copy via the vMotion-enabled network. The image below shows an example: for a VM configured with 16 GB of RAM, you must copy 16 GB.

Ideally, this step would be instantaneous. But it takes several seconds to copy 16 GB, and during this process some memory pages in the source memory change. The VMkernel tracks what pages have changed and then copies them to the destination host.

Suppose the changed pages amount to another gigabyte of RAM. While you copy that gigabyte, the memory changes again. This becomes an iterative process, as shown in the image below.

After several iterations, you should have copied most memory, and the amount left can be copied in less than 500 milliseconds. If you cannot reach that point because the source RAM changes too rapidly, then the VM is slowed down on the source with Stun During Page Send to make it possible to copy all memory.

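Sufficient bandwidth is the most important requirement for large VMs. For example, copying a 768 GB VM over a 1 Gbps link takes too long to ever reach a stable switchover point. Even at full line rate, the first pass alone takes about 1.7 hours, during which a busy VM changes a large share of its memory again.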
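The iterative copy described above can be sketched as a simple rate model. This is an illustration under stated assumptions, not VMware's algorithm: `simulate_precopy` and `PrecopyResult` are hypothetical names, the VM is assumed to dirty memory at a constant rate, the link is assumed to run at a constant rate in GB per second (Gbps divided by 8), and the slowdown applied by Stun During Page Send is not modeled.

```python
from dataclasses import dataclass


@dataclass
class PrecopyResult:
    converged: bool        # True if the remainder fit in the switchover window
    rounds: int            # number of copy passes, including the final one
    total_seconds: float   # time spent copying


def simulate_precopy(ram_gb: float, link_gb_per_s: float, dirty_gb_per_s: float,
                     switchover_s: float = 0.5, max_rounds: int = 30) -> PrecopyResult:
    """Toy model of iterative memory copy with a constant dirty rate."""
    remaining = ram_gb
    total = 0.0
    for round_no in range(1, max_rounds + 1):
        seconds = remaining / link_gb_per_s
        if seconds <= switchover_s:
            # What is left can be sent within the switchover window.
            return PrecopyResult(True, round_no, total + seconds)
        total += seconds
        # Memory dirtied during this pass must be sent in the next pass.
        remaining = min(ram_gb, dirty_gb_per_s * seconds)
    return PrecopyResult(False, max_rounds, total)


if __name__ == "__main__":
    # 16 GB VM on a 10 Gbps link (1.25 GB/s) that dirties 1 GB during the first pass.
    print(simulate_precopy(16, 1.25, 1 / 12.8))
    # 768 GB VM on a 1 Gbps link (0.125 GB/s) with a dirty rate above the link rate.
    print(simulate_precopy(768, 0.125, 0.2))
```

In this model the copy converges only when the link moves data faster than the VM dirties it. The first case settles after three passes, while the second never gets below the switchover window, which is the situation a faster vMotion network is meant to prevent.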

It's a good idea to have a dedicated vMotion network with 10 Gbps or more and multiple network adapters. Multiple VMkernel ports are also recommended if you perform many migrations simultaneously or migrate large VMs.

Once you copy the memory, the instruction processing stops on the source ESXi host and continues on the destination ESXi host. From the guest OS's perspective, nothing happened: all instructions are still processed, just on another host.

The network also needs to be updated, because traffic for the VM must now be sent to the destination ESXi host -- not the source ESXi host. The destination ESXi host sends a Reverse Address Resolution Protocol (RARP) packet to the physical switch, which triggers the MAC learning process so that the switch lists the VM's MAC address on the port where the destination ESXi host is connected.
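To show what such a notification looks like on the wire, here is a minimal sketch that builds a broadcast RARP frame with the VM's MAC address as the Ethernet source. The EtherType (0x8035) and the "request reverse" opcode (3) come from the RARP specification. The helper names and the choice to zero the IP fields are assumptions for illustration, and the exact field contents ESXi uses may differ. The sketch only builds the bytes and does not send anything.

```python
import struct

BROADCAST_MAC = bytes([0xFF] * 6)
ETHERTYPE_RARP = 0x8035       # EtherType assigned to RARP
RARP_REQUEST_REVERSE = 3      # opcode for a RARP "request reverse"


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' to 6 raw bytes."""
    parts = mac.split(":")
    if len(parts) != 6:
        raise ValueError(f"not a MAC address: {mac!r}")
    return bytes(int(part, 16) for part in parts)


def build_rarp_frame(vm_mac: str) -> bytes:
    """Build a broadcast RARP frame whose source is the VM's MAC address."""
    src = mac_to_bytes(vm_mac)
    ethernet_header = BROADCAST_MAC + src + struct.pack("!H", ETHERTYPE_RARP)
    # Hardware type 1 (Ethernet), protocol type 0x0800 (IPv4),
    # hardware length 6, protocol length 4, then the opcode.
    rarp_header = struct.pack("!HHBBH", 1, 0x0800, 6, 4, RARP_REQUEST_REVERSE)
    # Sender and target hardware addresses are the VM's MAC; IP fields are
    # left as zeros (an assumption made for this sketch).
    rarp_body = src + bytes(4) + src + bytes(4)
    return ethernet_header + rarp_header + rarp_body


if __name__ == "__main__":
    frame = build_rarp_frame("00:50:56:aa:bb:cc")  # example MAC, not a real VM
    print(len(frame), frame.hex())
```

The part that matters for the switch is the Ethernet source address: when a frame with the VM's MAC arrives on a new port, the switch updates its MAC table to point at that port.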

Now the workload is seamlessly migrated. In practice, this means you can move any TCP-enabled application without interruption.

Enhancements and considerations

Over the years, most improvements to vMotion were implemented because of ever-increasing VM specifications. This is the case with vSphere 7, where in update 2 you can configure a VM with a maximum of 896 vCPUs and 24 TB of RAM.

VMware made important improvements in vSphere 7 U1 to how the VMkernel initiates the process that tracks which memory pages change during the memory copy process.

The Loose Page Trace Install feature eliminates the need to stall all vCPUs when you insert a page tracer. It dedicates one vCPU to perform this process so all other vCPUs can continue to execute guest OS instructions.
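The idea of tracking which pages change during a copy can be pictured with a toy model. The class below is a hypothetical sketch and not how the VMkernel implements page tracing: it records the page numbers written during a copy pass and hands them back as the work list for the next pass.

```python
class DirtyPageTracker:
    """Toy dirty-page tracker: remembers which pages were written."""

    def __init__(self, page_count: int) -> None:
        self.page_count = page_count
        self._dirty: set[int] = set()

    def record_write(self, page: int) -> None:
        """Mark a page as changed since the last drain."""
        if not 0 <= page < self.page_count:
            raise IndexError(f"page {page} out of range")
        self._dirty.add(page)

    def drain(self) -> list[int]:
        """Return the changed pages in order and reset the tracker."""
        pages = sorted(self._dirty)
        self._dirty.clear()
        return pages


if __name__ == "__main__":
    tracker = DirtyPageTracker(page_count=8)
    for page in (3, 1, 3):        # the guest writes to pages during a copy pass
        tracker.record_write(page)
    print(tracker.drain())        # pages to resend in the next pass
    print(tracker.drain())        # nothing changed since the last drain
```

Each call to `drain` corresponds to the start of a new copy iteration: only the pages written since the previous pass need to be sent again.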

Another enhancement is that the VM process is already switched over before you move all memory to its destination. The memory on the other host is marked as remote and is fetched later.

If you properly design and size your hosts and networking components, then there are no major disadvantages to vMotion.

There can be an effect on overall performance if you run continuous migrations in a cluster. In that case, evaluate and optimize cluster sizing and setup for vMotion. You can also set the Distributed Resource Scheduler, which automates vMotion, to a more conservative migration threshold.