A beginner's guide to hosted and bare-metal virtualization

Choosing a virtualization approach isn't a matter of competition. Admins must consider their specific use cases to determine which hypervisor type suits their needs.

Virtualization has become a ubiquitous, end-to-end technology for data centers, edge computing installations, networks, storage and even endpoint desktop systems. However, admins and decision-makers should remember that each virtualization technique differs from the others. Bare-metal virtualization is clearly the preeminent technology for many IT goals, but hosted hypervisor technology works better for certain virtualization tasks.

By installing a hypervisor to abstract software from the underlying physical hardware, IT admins can increase the utilization of computing resources while supporting greater workload flexibility and resilience. Take a fresh look at the two classic virtualization approaches and examine the current state of both technologies.

What is bare-metal virtualization?

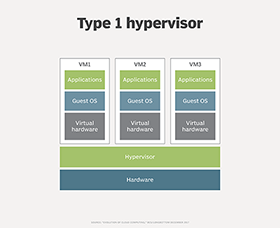

Bare-metal virtualization installs a Type 1 hypervisor -- a software layer that handles virtualization tasks -- directly onto the hardware before admins install any other OSes, drivers or applications. Common Type 1 hypervisors include VMware ESXi and Microsoft Hyper-V. Admins often refer to bare-metal hypervisors as the OSes of virtualization, though hypervisors aren't OSes in the traditional sense.

Once admins install a bare-metal hypervisor, that hypervisor can discover and virtualize the system's available CPU, memory and other resources. The hypervisor creates a virtual image of the system's resources, which it can then provision to create independent VMs. VMs are essentially individual groups of resources that run OSes and applications. The hypervisor manages the connection and translation between physical and virtual resources, so VMs and the software that they run only use virtualized resources.

Since virtualized resources and physical resources are inherently bound to each other, virtual resources are finite. This means the number of VMs a bare-metal hypervisor can create is contingent upon available resources. For example, if a server has 24 CPU cores and the hypervisor translates those physical CPU cores into 24 vCPUs, you can create any mix of VMs that uses up to that total number of vCPUs -- e.g., 24 VMs with one vCPU each, 12 VMs with two vCPUs each and so on. Though admins could potentially assign more virtual resources than the physical hardware provides to create more VMs -- a practice known as oversubscription -- doing so forces VMs to contend for the same physical resources and can degrade performance.
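The vCPU arithmetic above can be sketched in a few lines of Python. This is only an illustration of the counting argument, assuming one vCPU per physical core as in the 24-core example; the function names and the sample VM sizes are hypothetical and don't correspond to any hypervisor's API.

```python
from typing import List


def max_identical_vms(total_vcpus: int, vcpus_per_vm: int) -> int:
    """Return how many equally sized VMs fit without oversubscription."""
    if vcpus_per_vm <= 0:
        raise ValueError("vcpus_per_vm must be positive")
    return total_vcpus // vcpus_per_vm


def fits_without_oversubscription(vm_sizes: List[int], total_vcpus: int) -> bool:
    """True if the combined vCPU demand stays within the available vCPUs."""
    return sum(vm_sizes) <= total_vcpus


def oversubscription_ratio(vm_sizes: List[int], physical_cores: int) -> float:
    """Allocated vCPUs divided by physical cores; above 1.0 means oversubscribed."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vm_sizes) / physical_cores


PHYSICAL_CORES = 24  # the example server: 24 cores mapped to 24 vCPUs

print(max_identical_vms(PHYSICAL_CORES, 1))  # 24
print(max_identical_vms(PHYSICAL_CORES, 2))  # 12

# Hypothetical mix of VM sizes that uses exactly 24 vCPUs.
mixed = [8, 8, 4, 2, 2]
print(fits_without_oversubscription(mixed, PHYSICAL_CORES))  # True

# Hypothetical oversubscribed layout: 18 VMs with two vCPUs each.
crowded = [2] * 18
print(fits_without_oversubscription(crowded, PHYSICAL_CORES))  # False
print(oversubscription_ratio(crowded, PHYSICAL_CORES))  # 1.5
```

A ratio above 1.0 doesn't guarantee problems, but it means VMs can end up waiting on the same physical cores when they are busy at the same time.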

Once the hypervisor creates a VM, admins can configure the VM by installing an OS, such as Windows Server 2019, and an application, such as a database. The critical characteristic of a bare-metal hypervisor and its VMs is that every VM remains logically isolated from and independent of every other VM. This means that no VM within a system can use the resources assigned to another VM, or even has awareness of any other VM on that system.

A VM runs within a system's memory, but admins can save a fully configured and functional VM to disk. From there, they can back up the VM, reload it onto the same or other servers in the future, or duplicate it to invoke multiple instances of the same VM on other servers.

Advantages and disadvantages of bare-metal virtualization

Virtualization is a mature and reliable technology; VMs provide powerful isolation and mobility. With bare-metal virtualization, every VM is logically isolated from every other VM, even when those VMs coexist on the same hardware. A single VM can neither directly share data with nor disrupt the operation of other VMs, and it can't access the memory content or traffic of other VMs. In addition, a fault or failure in one VM does not disrupt the operation of other VMs. In fact, the only real way for one VM to interact with another VM is to exchange traffic through the network as if each VM were its own separate server.

Bare-metal virtualization also supports live VM migration, which enables VMs to move from one virtualized system to another without halting VM operations. Live migration enables admins to easily balance server workloads or offload VMs from a server that requires maintenance, upgrades or replacements. Live migration also increases efficiency compared to manually reinstalling applications and copying data sets.

However, the hypervisor itself poses a potential single point of failure (SPOF) for a virtualized system, because a fault or compromise in the hypervisor affects every VM on that host. Still, virtualization technology is mature and stable enough that serious faults and exploitable flaws in modern hypervisors, such as VMware ESXi 7, are comparatively rare. If a VM fails, the cause probably lies in that VM's OS or application, rather than in the hypervisor.

What is hosted virtualization?

Hosted virtualization offers many of the same characteristics and behaviors as bare-metal virtualization. The difference comes from how admins install the hypervisor. In a hosted environment, admins install the host OS first and then install a suitable Type 2 hypervisor -- such as VMware Workstation or Oracle VirtualBox -- atop that OS. KVM is sometimes grouped with hosted hypervisors as well, although it runs as part of the Linux kernel and is often classified as a Type 1 hypervisor.

Once admins install a hosted hypervisor, the hypervisor operates much like a bare-metal hypervisor. It discovers and virtualizes resources and then provisions those virtualized resources to create VMs. The hosted hypervisor and the host OS manage the connection between physical and virtual resources so that VMs -- and the software that runs within them -- only use those virtualized resources.

However, with hosted virtualization, the hypervisor can't provision the resources that the host OS and its applications already consume. This means that a hosted hypervisor can only create as many VMs as the system's resources allow after subtracting the physical resources the host OS requires.
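To make the subtraction concrete, here is a minimal Python sketch of how a host OS reservation reduces the capacity left for VMs. All figures (an 8-core, 32 GiB endpoint, a 2-core, 8 GiB host reservation and equally sized VMs) are made-up assumptions for illustration, and the `Resources`, `available_for_vms` and `vm_capacity` names are hypothetical rather than part of any hypervisor's tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_cores: int
    memory_gib: int


def available_for_vms(physical: Resources, host_reserved: Resources) -> Resources:
    """Subtract what the host OS and its applications need from the physical total."""
    return Resources(
        cpu_cores=max(physical.cpu_cores - host_reserved.cpu_cores, 0),
        memory_gib=max(physical.memory_gib - host_reserved.memory_gib, 0),
    )


def vm_capacity(available: Resources, per_vm: Resources) -> int:
    """Number of equally sized VMs that fit; the scarcest resource sets the limit."""
    if per_vm.cpu_cores <= 0 or per_vm.memory_gib <= 0:
        raise ValueError("per-VM resources must be positive")
    return min(
        available.cpu_cores // per_vm.cpu_cores,
        available.memory_gib // per_vm.memory_gib,
    )


# Hypothetical endpoint and host OS reservation.
laptop = Resources(cpu_cores=8, memory_gib=32)
host_os = Resources(cpu_cores=2, memory_gib=8)
vm_size = Resources(cpu_cores=2, memory_gib=8)

remaining = available_for_vms(laptop, host_os)
print(remaining)                        # Resources(cpu_cores=6, memory_gib=24)
print(vm_capacity(remaining, vm_size))  # 3
print(vm_capacity(laptop, vm_size))     # 4, if no host OS reservation were needed
```

In this made-up case, the host OS reservation costs one VM's worth of capacity compared with handing the whole machine to the hypervisor.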

The VMs the hypervisor creates can each receive guest OSes and applications. In addition, every VM created under a hosted hypervisor is isolated from every other VM. Similar to bare-metal virtualization, VMs in a hosted system run in memory, and admins can save or load them as disk files to protect, restore or duplicate VMs as desired.

Hosted hypervisors are most commonly used in endpoint systems, such as laptop and desktop PCs, to run two or more desktop environments, each with potentially different OSes. This can benefit business activities such as software development.

In spite of this, organizations use hosted virtualization less often because the presence of a host OS offers no benefits in terms of virtualization or VM performance. The host OS imposes an additional layer of translation between the VMs and the underlying hardware. Inserting a common OS also creates a SPOF for the entire computer, meaning a fault in the host OS affects the hosted hypervisor and all of its VMs.

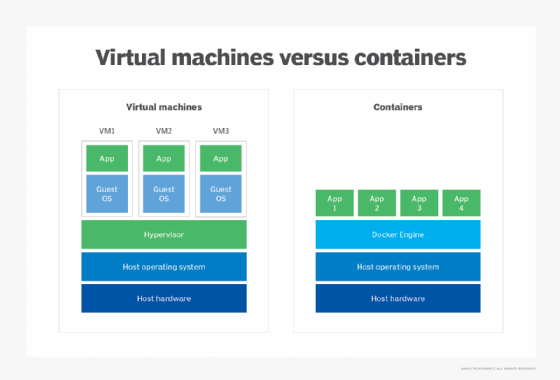

Although hosted hypervisors have fallen by the wayside for many enterprise tasks, the technology has found new life in container-based virtualization. Containers are a form of virtualization that relies on a container engine, such as Docker, LXC or Apache Mesos, which fills a role similar to that of a hosted hypervisor. The container engine creates and manages virtual instances -- the containers -- that share the services of a common host OS such as Linux.

The crucial difference between hosted VMs and containers is that each VM runs its own guest OS in isolation from other VMs, while containers share the same underlying OS kernel. This enables containers to consume fewer system resources compared to VMs. Additionally, containers can start up much faster and exist in far greater numbers than VMs, enabling greater dynamic scalability for workloads that rely on microservice-type software architectures, as well as important enterprise services such as network load balancers.

Container engines are a variation on hosted hypervisor technology, but containers and VMs are not directly interchangeable. Still, the fundamental concepts of hosted virtualization apply to containers. You can also mix container and VM technologies in a single environment. For example, you can create a VM under a Type 1 hypervisor, install a Linux OS in that VM and then run Docker or another container engine on that guest OS. This means admins can create a container deployment within a traditional VM to reap the benefits of both technologies.

What are the advantages and disadvantages of hosted virtualization?

Hosted virtualization promises the same basic benefits found in bare-metal virtualization. A hosted hypervisor can create well-isolated VMs, and some -- though not all -- hosted hypervisors support VM migration to other virtualized systems.

The main disadvantage of hosted virtualization is potential degradation in VM performance, which usually shows up as increased latency within VMs and their workloads. This performance penalty results from the host OS, which imposes an additional layer of translation between the VMs and the underlying system hardware.

A hosted hypervisor can be a bit simpler and less expensive than a bare-metal hypervisor because the hosted hypervisor can defer some tasks to the underlying OS. Still, the performance penalty usually limits the number of VMs that the hypervisor can support simultaneously on the system. In addition, the presence of a host OS itself poses a potential SPOF for the virtualized system. Given the frequent patches and updates of some OSes and the many diverse builds of others, an OS fault can affect the entire system, including the hypervisor and all of its VMs.

The rise of container-based virtualization poses slightly different pros and cons. Containers use a variation of hosted virtualization where admins install a container engine on a host OS. A container engine can use the services and capabilities of the host OS, and the containers can forgo a guest OS of their own in favor of the shared OS kernel.

Containers can be extremely small and agile. They exist basically as code and dependencies, so admins can start them up quickly and run them in high volumes on a system. In addition, containers can easily and quickly migrate between systems running compatible container engines. This makes containers particularly useful when admins require rapid scalability.

However, containers share a common OS kernel. As a result, all of the containers on that system share the same flaws and performance limitations of that OS kernel. If the OS kernel crashes or an attacker exploits a security flaw in it, this affects every single container running on that OS kernel. Many admins avoid this potential problem by creating a container environment within a conventional VM, thus using the VM's isolation to contain and limit any vulnerabilities that containers might pose.
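The effect of a shared kernel on failure scope can be modeled with a small Python sketch. It maps each OS kernel to the containers that depend on it and compares a flat layout, where every container shares one host kernel, with a layout that splits the same containers across two VMs with their own guest kernels. The kernel and container names are hypothetical, and the model deliberately ignores the hypervisor and hardware, which remain shared failure points in both layouts.

```python
from typing import Dict, List


def affected_containers(layout: Dict[str, List[str]], failed_kernel: str) -> List[str]:
    """Return the containers that depend on the kernel that crashed or was compromised."""
    return sorted(layout.get(failed_kernel, []))


# Flat layout: one host OS kernel shared by every container (hypothetical names).
flat = {
    "host-kernel": ["web", "api", "queue", "cache"],
}

# Nested layout: the same containers split across two VMs,
# each VM running its own guest OS kernel.
nested = {
    "vm-a-kernel": ["web", "api"],
    "vm-b-kernel": ["queue", "cache"],
}

print(affected_containers(flat, "host-kernel"))    # ['api', 'cache', 'queue', 'web']
print(affected_containers(nested, "vm-a-kernel"))  # ['api', 'web']
```

In the nested layout, a kernel fault in one VM leaves the containers in the other VM running, which is the isolation benefit the VM boundary provides.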

The final verdict: Bare-metal vs. hosted virtualization

The verdict isn't about which type of virtualization is necessarily better. Rather, admins should match the virtualization technology with their particular use case. Bare-metal virtualization, hosted virtualization and container virtualization can all find a meaningful place together.

Bare-metal virtualization works best in the data center for admins looking to maximize the use of server resources by running two or more VMs on the same system. Admins who use bare-metal virtualization gain the benefits of VM logical isolation, mobility and performance.

Hosted virtualization has a place on endpoint computers. It enables laptop or desktop computers to run two or more VMs -- in most cases, those VMs represent different OSes and desktop configurations. For example, one VM might run a Windows 10 desktop, while another VM might run a macOS desktop. Software developers who need to create and test software for OSes that their endpoint doesn't run natively might find hosted virtualization particularly useful. The use of virtualization avoids the expense and hassle of purchasing and maintaining multiple physical endpoints.

Container technology is still maturing, but it has found a place in the data center to enable complex, dynamic, highly scalable services and next-generation software architectures. These services and architectures often don't work efficiently with the traditional monolithic software approach that conventional VMs reflect.